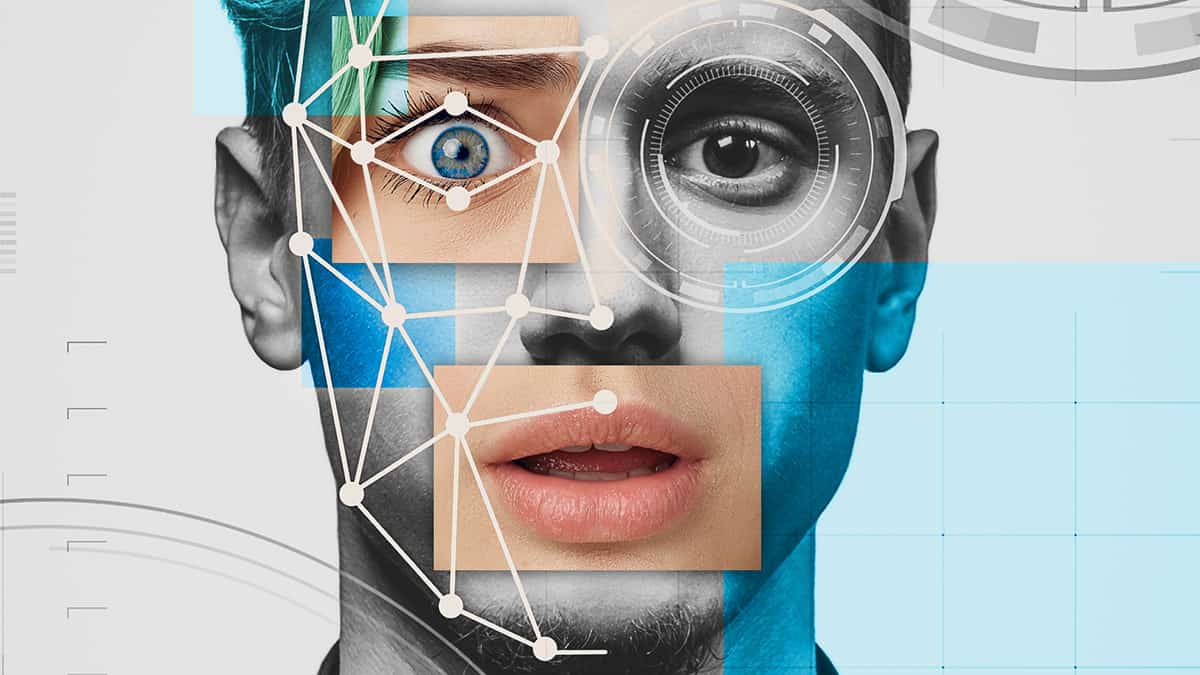

“Deepfake Technology” – An Overview

Deepfake technology, much like the name suggests, manipulates media and can essentially be called Photoshop for videos. You can change a person’s face, their voice, or both. You can even replace a real person with AI-generated faces or voices.

Although this technology sounds relatively new and high-tech, it has actually evolved gradually into the shape you see today. Think back to when it took a lot of time for camera crews to reach certain destinations, especially for news. Many news outlets would then produce videos that would be used in place of actual footage.

However, unlike then, we don’t need expertise to create deepfake videos; in fact, anyone with a stable internet connection can download software that will let them create masterpieces in their spare time.

But a not-so-homemade example of deepfake technology today is Princess Leia in Star Wars: The Rise of Skywalker. The character was first recreated using CGI for the earlier film Rogue One: A Star Wars Story, which led to many fans hating on it and even saying that the ending was spoiled for them.

Considering how realistic Princess Leia appears in The Rise of Skywalker, one can imagine that this technology could have far-reaching effects if used for something sinister, with some people, like Florida Republican Senator Marco Rubio, comparing deepfakes to modern-day nuclear bombs. I do not know how true that claim is, but for now the most visible public uses of deepfake technology have been putting people’s faces on porn stars and making politicians say funny things.

But there is no denying that deepfake technology can be a deadly tool if it falls into the wrong hands. Think of the phenomenon of fake news, but one step further. Imagine creating not just news stories that may be exaggerated or not entirely true, but entire fake interviews with world leaders or other important people.

It will definitely lead to unpleasant consequences, to say the least, as the CEO of a UK-based energy firm would testify. The poor guy received a call from what sounded like the parent company’s chief executive and was told to transfer 220,000 euros into a Hungarian bank account. He complied, only to find, much to his horror, that the call was a hoax engineered with deepfake-powered voice impersonation.

Less sinister, but with the potential to be, was the whole President Donald Trump and Jim Acosta fiasco from 2018. Although it was an example of a shallow fake rather than a deepfake, it was enough to turn the actual events (a White House intern trying to snatch the mike from Acosta) into something that looked like Acosta attacking her. This simply shows that even a small alteration to a piece of content can be used to malign anyone. Another example is a video of Nancy Pelosi that was slowed down to make her sound sluggish, with her voice altered so that the slowed audio still sounded normal.

It is predicted that deepfake videos will become more and more difficult to catch, because as we get better at catching them, the technology that generates deepfake content also keeps learning and getting better at fooling us. Yes, you read that right.

Deepfake technology essentially runs on generative adversarial networks, or GANs. This technique was introduced in 2014, and its algorithm makes use of two different AIs: one generates deepfake content and the other tries to spot the forgery. When the two AIs start, the content generator has no idea what the human form looks like, so whatever alterations it makes are easily detected by the spotter AI. However, as both AIs keep learning from each round, the first AI gets better and better at fooling the second one, until AI two can no longer tell the doctored content from the real thing.
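To make the tug-of-war between the two AIs a little more concrete, here is a toy sketch in plain Python. It is nowhere near a real deepfake system: instead of faces, the "real" data is just numbers scattered around 1.0, the forger only learns a single offset (`g_shift`), and the spotter is a simple two-parameter classifier (`w`, `c`). All of these names, the learning rate, and the starting values are assumptions picked for illustration, not taken from any actual deepfake software.

```python
import math
import random


def sigmoid(t):
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-t))


def train_toy_gan(real_mean=1.0, steps=3000, batch=64, lr=0.05, seed=0):
    """Train a one-parameter forger against a two-parameter spotter.

    Hypothetical toy setup: real samples are numbers around real_mean,
    fake samples are g_shift plus random noise.
    """
    rng = random.Random(seed)
    g_shift = -3.0   # forger starts far away from the real data
    w, c = 0.0, 0.0  # spotter: P(real | x) = sigmoid(w * x + c)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 1.0) for _ in range(batch)]
        fake = [g_shift + rng.gauss(0.0, 1.0) for _ in range(batch)]

        # Spotter step: push P(real) up on real samples, down on fakes.
        grad_w = grad_c = 0.0
        for x in real:
            err = 1.0 - sigmoid(w * x + c)
            grad_w += err * x
            grad_c += err
        for x in fake:
            err = -sigmoid(w * x + c)
            grad_w += err * x
            grad_c += err
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Forger step: shift the fakes in the direction that makes the
        # spotter more likely to call them real.
        grad_g = sum((1.0 - sigmoid(w * x + c)) * w for x in fake) / batch
        g_shift += lr * grad_g

    return g_shift, w, c


if __name__ == "__main__":
    g_shift, w, c = train_toy_gan()
    print("forger offset:", round(g_shift, 2))
    print("spotter weight:", round(w, 2))
```

In this toy version, the forger's offset should drift from its starting point toward the real data, while the spotter's weight shrinks toward zero as it runs out of clues to separate real from fake. Real GANs replace these few numbers with large neural networks, but the alternating "spot, then fool" loop is the same idea.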

Even though neither the naked eye nor AI two can see where a particular video has been doctored, not all hope is lost, as there are tools that can detect even the slightest detail amiss. It could be a person on screen who hasn’t blinked in a while, or a shift in the lighting.
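The blinking clue can be sketched as a simple check. The snippet below assumes that some face-tracking tool has already given us one "eye openness" score per video frame (1.0 for wide open, close to 0.0 for shut); that input, the function names, and the thresholds are all hypothetical and chosen only for illustration. Real detectors look at many more signals than this.

```python
def longest_gap_without_blink(eye_openness, fps=30.0, closed_below=0.2):
    """Return the longest stretch, in seconds, with no closed-eye frame."""
    longest = current = 0
    for score in eye_openness:
        if score < closed_below:
            current = 0  # eye is closed: a blink resets the counter
        else:
            current += 1
            longest = max(longest, current)
    return longest / fps


def looks_suspicious(eye_openness, fps=30.0, max_gap_seconds=10.0):
    """Flag a clip whose subject goes too long without blinking.

    max_gap_seconds is an assumed cut-off, not an established standard.
    """
    return longest_gap_without_blink(eye_openness, fps) > max_gap_seconds


if __name__ == "__main__":
    blinking_clip = ([1.0] * 89 + [0.05]) * 10  # a blink roughly every 3 seconds
    staring_clip = [1.0] * 600                  # 20 seconds without a blink
    print(looks_suspicious(blinking_clip))
    print(looks_suspicious(staring_clip))
```

A check like this is only a hint, not proof: a real person can stare for a while, and a well-made deepfake can be trained to blink.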

It is a scary thought to watch something and not be able to believe your own eyes. Perhaps it is this fear that will keep us open to the possibility that any video could be fake, and keep us vigilant and eager to learn about deepfake technology, so that we have the means and tools to protect ourselves if we find ourselves in a deepfake bind or, even better, to poke some fun at our friends.

-Nida Khan
